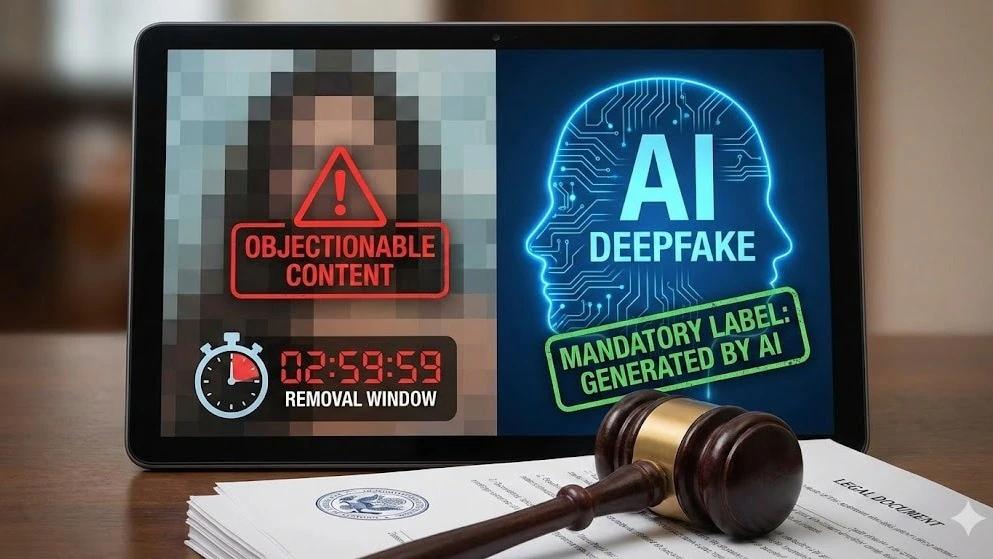

India Tightens Synthetic Content Rules, Mandates Faster Takedown of AI-Generated and Deepfake Material

- By Krish Rathore

- 16 February, 2026

India has moved to significantly tighten its regulatory framework around synthetic and AI-generated content, introducing stricter compliance requirements for online platforms and faster takedown timelines for harmful deepfakes. The updated provisions aim to address the growing misuse of artificial intelligence tools that can create hyper-realistic manipulated videos, audio clips, and images capable of spreading misinformation, damaging reputations, and influencing public opinion.

With generative AI technologies becoming widely accessible, policymakers have expressed concern over the rapid rise of deepfake incidents. These include fake political speeches, manipulated celebrity videos, financial scams, and even non-consensual content targeting private individuals. The government’s new measures are designed to ensure that digital intermediaries act swiftly once such material is flagged.

Under the revised compliance framework, social media platforms and digital intermediaries are required to remove or disable access to AI-generated or deepfake content within a significantly reduced timeframe after receiving valid complaints. The rules also emphasize proactive monitoring mechanisms and stronger grievance redressal systems to prevent the viral spread of harmful synthetic material.

A key focus of the regulation is accountability. Platforms must now demonstrate due diligence in identifying manipulated media, implementing labeling mechanisms where applicable, and cooperating with law enforcement agencies. Failure to comply could result in penalties, legal consequences, or loss of safe-harbor protections that shield intermediaries from liability.

The move reflects India’s broader push to create a safer digital ecosystem while balancing innovation and free expression. Authorities have clarified that the intent is not to stifle legitimate AI innovation, creative expression, or satire, but to prevent malicious use cases that threaten public order, electoral integrity, and individual privacy.

Cybersecurity experts have welcomed the clarity in timelines, noting that speed is critical in dealing with viral misinformation. Deepfakes, particularly during elections or crisis situations, can spread rapidly across platforms, making early intervention crucial. At the same time, digital rights advocates have called for transparent enforcement mechanisms to ensure that takedown powers are not misused or applied arbitrarily.

India’s updated stance aligns with global efforts to regulate artificial intelligence responsibly. Several countries are exploring similar frameworks to manage risks associated with generative AI, including watermarking requirements, disclosure norms, and stricter platform obligations.

As AI capabilities continue to evolve, regulatory frameworks are expected to adapt further. The latest move signals that India is taking a proactive approach to digital governance, seeking to safeguard citizens from manipulation while encouraging responsible innovation. The effectiveness of these measures, however, will ultimately depend on robust enforcement, the technological readiness of platforms, and public awareness of how to identify synthetic content.

In an era where seeing is no longer believing, India’s tightened rules mark a significant step toward preserving trust in digital information and protecting users from the potential harms of AI-driven deception.

Note: Content and images are for informational use only. For any concerns, contact us at info@rajasthaninews.com.
